Dec 5, 2025

Introduction to Language Models for Beginners

Language models power many of the tools you use every day, even if you never think about the technology behind them. When your email suggests the next phrase, when Google answers a question directly, or when an AI assistant responds to your query, a language model is working in the background.

The global natural language processing market was valued at USD 27.6 billion in 2022 and is projected to reach USD 144.9 billion by 2032 (Precedence Research). This rapid growth reflects how essential language models have become across industries, from search and customer support to voice automation and content generation.

This guide breaks down the top language models you should know as a beginner, explains how they work, and shows where you already encounter them. It keeps the explanations simple, practical, and focused on real-world relevance so you can understand how these systems shape the technology around you.

Key Takeaways

Before you explore the full list of language models, here are the main points you should keep in mind:

Language models help machines understand and generate human-like text.

Modern systems learn from massive datasets to recognize patterns, context, and intent.

Models like GPT, BERT, and LLaMA power many tools you use daily, including chatbots, search engines, and writing assistants.

Each model type has unique strengths such as reasoning, translation, summarization, or sentiment understanding.

Language models are becoming more multimodal and efficient, which makes them more accessible to businesses of all sizes.

What Are Language Models?

A language model is an artificial intelligence system that learns how human language works by studying large collections of text. It observes how words relate to one another, how sentences are structured, and how meaning shifts with context. By learning these patterns, the model can predict what word should appear next in a sequence or generate entirely new text that sounds natural.

At a deeper level, a language model goes beyond simple prediction. It learns grammar structures, stylistic patterns, intent cues, and relationships between ideas. This allows it to summarize long documents, interpret layered questions, analyze sentiment, and follow multi-step instructions across different tasks.

There are several types of language models, each designed with different goals and capabilities:

Statistical Models: Early approaches that estimate word probabilities based on frequency and sequence patterns.

Neural Language Models: Models that use neural networks to learn semantic relationships between words, typically by representing each word as a vector called an embedding.

Transformer-Based Models: Modern architectures that use attention mechanisms to understand context across long text spans, powering today’s advanced LLMs.

Multimodal Models: Newer systems that combine text with images, audio, or video for richer understanding.
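To make the first category concrete, here is a minimal sketch of a statistical (bigram) model. It assumes a tiny hypothetical corpus, counts which word follows which, and turns those counts into next-word probabilities. Modern models learn far richer patterns, but the underlying goal of predicting the next word is the same.

```python
from collections import Counter, defaultdict

def train_bigram_counts(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Convert raw follow-up counts into probabilities (empty if the word was never seen)."""
    following = counts.get(prev.lower(), Counter())
    total = sum(following.values())
    return {word: n / total for word, n in following.items()} if total else {}

# Hypothetical toy corpus; "." is treated as an ordinary word here.
corpus = "please send the report . please send the invoice . please call the manager ."
counts = train_bigram_counts(corpus)
print(next_word_probs(counts, "please"))  # "send" is twice as likely as "call"
print(next_word_probs(counts, "the"))
```

Because the model only counts word pairs, it has no way to use context further back than one word, which is the gap neural and transformer models were built to close.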

Because of these abilities, language models have become the core layer behind AI systems in search, customer support, content generation, analytics, and voice-based applications, wherever software must understand language accurately and respond with context.

Popular Language Models You Should Know as a Beginner

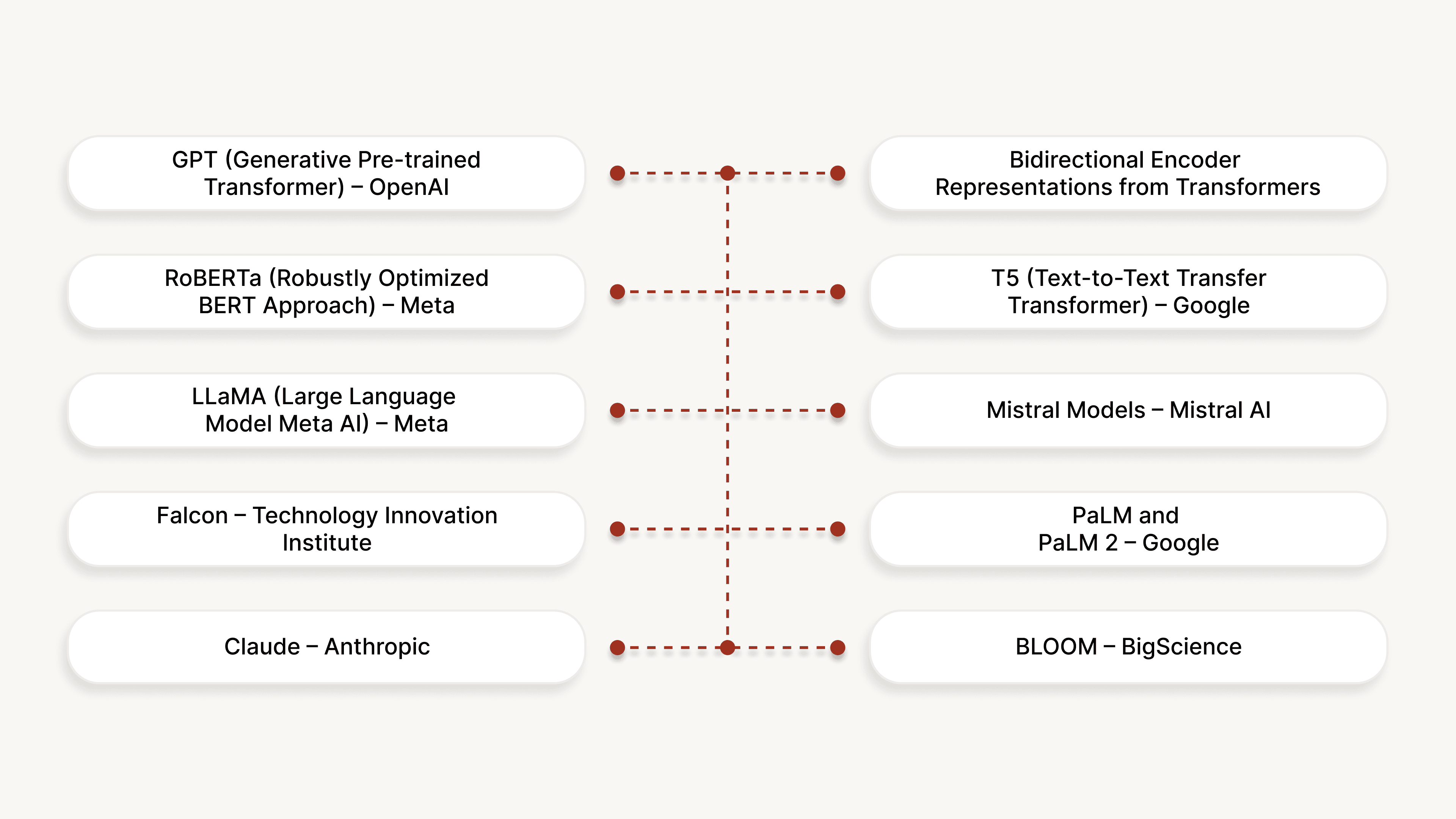

Language models come in many forms, and each one introduces a different approach to understanding or generating text. Here are the most important models shaping today’s AI ecosystem, with practical explanations of what makes each one stand out.

1. GPT (Generative Pre-trained Transformer) – OpenAI

GPT models are built to generate natural and continuous text, making them highly effective for creative writing, question answering, and long-form explanations. Their strength lies in handling extended context, which allows them to maintain coherence across several paragraphs.

Where it is used:

advanced chat assistants

enterprise support bots

long-form content and code generation

GPT is the model family that popularized conversational AI because of its ability to follow instructions and reason across multiple steps.

2. BERT (Bidirectional Encoder Representations from Transformers) – Google

BERT reads text in both directions at once, which gives it a deeper understanding of sentence meaning. Unlike GPT, it is not designed to generate new text but to interpret and classify existing text with high accuracy.

Where it is used:

Google Search ranking

intent classification

document analysis

Its bidirectional design makes it especially strong at identifying intent and recognizing nuanced meanings.
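The difference between BERT's bidirectional reading and GPT's left-to-right generation comes down to which words each position may look at. This plain-Python sketch builds both kinds of attention mask for a hypothetical five-word phrase. In the GPT-style (causal) mask, a word sees only the words before it; in the BERT-style mask, it sees the whole sentence, which is why BERT is trained by hiding some words and predicting them from both sides.

```python
def causal_mask(n):
    """GPT-style: position i may attend only to positions 0..i (its left context)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def bidirectional_mask(n):
    """BERT-style: every position may attend to every position."""
    return [[True] * n for _ in range(n)]

def visible_context(tokens, mask, i):
    """Return the tokens that position i is allowed to look at."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]

tokens = ["the", "bank", "of", "the", "river"]
# At "bank" (index 1), only the bidirectional view can see "river",
# the word that signals a riverbank rather than a financial bank.
print(visible_context(tokens, causal_mask(len(tokens)), 1))         # ['the', 'bank']
print(visible_context(tokens, bidirectional_mask(len(tokens)), 1))  # ['the', 'bank', 'of', 'the', 'river']
```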

3. RoBERTa (Robustly Optimized BERT Approach) – Meta

RoBERTa improves on BERT by training longer with larger batches, dropping BERT's next-sentence prediction objective, changing which words are masked on each pass (dynamic masking), and training on much more data. This results in stronger comprehension and better performance on standard language benchmarks.

Where it is used:

content moderation systems

social media sentiment analysis

compliance and risk scanning

It is preferred in scenarios where precision and robustness matter.

4. T5 (Text-to-Text Transfer Transformer) – Google

T5 reframes every language problem as a text-to-text task. Whether it is translation, summarization, or classification, the input and output are always text. This flexible design allows T5 to adapt to many tasks without specialized architectures.

Where it is used:

rewriting and paraphrasing tools

summarization systems

multi-task learning environments

Its unified approach is one reason researchers favor it for multi-purpose NLP pipelines.
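The text-to-text idea is easy to see in code: every task, including classification, becomes an input string paired with a target string. This hypothetical helper formats examples that way. The task prefixes mirror the style used in the T5 paper, but treat the exact strings as illustrative rather than a required format.

```python
# Illustrative task prefixes in the style of the T5 paper (assumed, not exhaustive).
TASK_PREFIXES = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "sentiment": "sst2 sentence: ",
}

def to_text_to_text(task, text, target=None):
    """Format one example so that both the input and the output are plain text."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task!r}")
    example = {"input": TASK_PREFIXES[task] + text}
    if target is not None:
        # Even a classification label is written as a word, not a class index.
        example["target"] = str(target)
    return example

print(to_text_to_text("translate_en_de", "That is good.", "Das ist gut."))
print(to_text_to_text("sentiment", "What a wonderful film!", "positive"))
```

Since every task shares this one format, a single model and a single training loop can cover translation, summarization, and classification; only the prefix tells the model which job to do.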

5. LLaMA (Large Language Model Meta AI) – Meta

LLaMA is designed to be efficient while still delivering strong performance. Its smaller versions can run on modest hardware, especially when compressed, which makes it accessible to smaller teams and researchers.

Where it is used:

fine-tuned enterprise assistants

research experiments

low-resource environments

Its openly available weights and range of model sizes have made it a popular foundation for many custom AI projects.

6. Mistral Models – Mistral AI

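Mistral models achieve impressive performance relative to their size. Mistral 7B, for example, uses grouped-query attention for faster inference and sliding-window attention to handle longer inputs at lower cost, while staying competitive in accuracy with larger models.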

Where it is used:

on-device or edge applications

real-time processing systems

enterprise tools that need efficiency

They are popular for tasks that demand high accuracy but cannot rely on large GPUs or cloud-heavy setups.

7. Falcon – Technology Innovation Institute

Falcon models are trained largely on RefinedWeb, a heavily filtered and deduplicated web dataset built to emphasize quality. Some versions also include data in several European languages, making them usable in multilingual settings.

Where it is used:

multilingual chat systems

document comprehension

research and academic applications

Its strong performance on open benchmarks has made it a respected open-source option.

8. PaLM and PaLM 2 – Google

PaLM models are built for advanced reasoning, code generation, and problem-solving. They rely on extensive training datasets that help them handle tasks requiring logic and structured thinking.

Where it is used:

coding assistants

education and tutoring tools

enterprise productivity applications

Their strength lies in completing tasks that require multi-step reasoning rather than simple pattern matching.

9. Claude – Anthropic

Claude focuses heavily on safety, clarity, and controllability. It can work with extremely long inputs, making it useful for analyzing entire documents or complex instructions in a single pass.

Where it is used:

compliance and policy review

document summarization

enterprise knowledge automation

Its safety-centric design is a differentiator in sensitive environments.

10. BLOOM – BigScience

BLOOM is a 176-billion-parameter multilingual model created by BigScience, a global research collaboration, and released with openly available weights. It supports 46 natural languages and 13 programming languages, including many underrepresented languages.

Where it is used:

multilingual applications for global audiences

academic research

culturally diverse content creation

Its documented training data, published code, and openly released weights give researchers unusual visibility into how the model was built.

Together, these models illustrate how different architectures and training approaches can shape real-world AI performance, giving beginners a practical view of the tools driving today’s language intelligence.

How Language Models Work

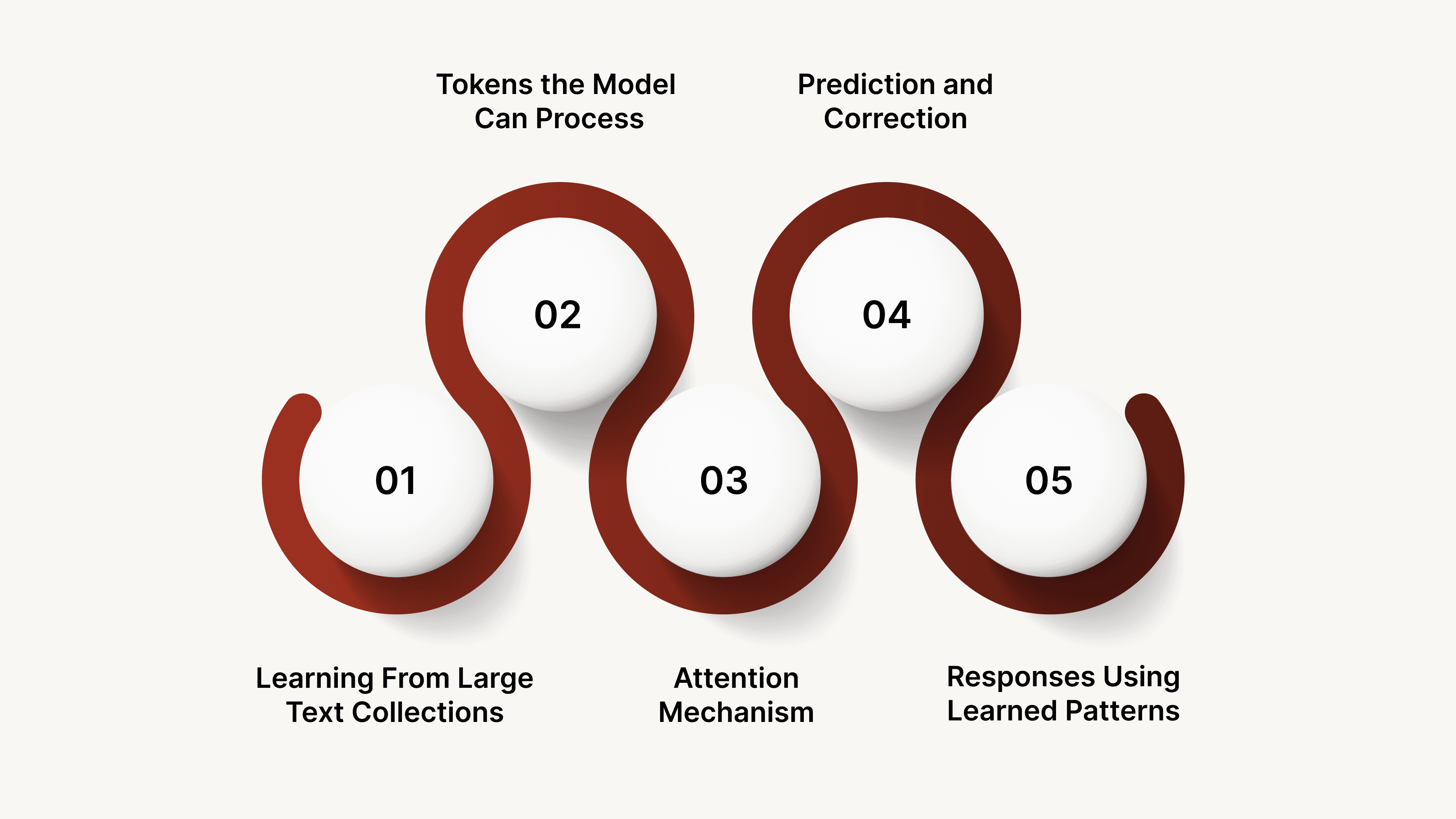

Language models learn to understand and generate text through a series of steps that build their ability to recognize structure, meaning, and intent. Each stage teaches the model something specific about how language behaves in real conversations.

1. Learning From Large Text Collections

A language model begins by processing huge amounts of text across different topics and writing styles. The goal is not to memorize these sources, although models can sometimes reproduce passages that appear often in their training data. Instead, training pushes the model to pick up recurring patterns such as how questions are phrased, how opinions are expressed, or how facts are presented.

This gives the model a general sense of how humans communicate in different contexts.

2. Converting Text Into Tokens the Model Can Process

Humans read entire words, but models work better with smaller pieces called tokens.

For example, depending on the tokenizer, “unbelievable” may be split into “un,” “believ,” and “able.”

This helps the model:

understand uncommon or new words

manage long or complex sentences

recognize variations of the same root word

Because any word can be broken into pieces the model already knows, tokenization lets it handle rare words, misspellings, and variations across languages with a fixed vocabulary.
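A minimal sketch of one common approach, greedy longest-match subword tokenization (similar in spirit to WordPiece), shows how “unbelievable” breaks into reusable pieces. The vocabulary here is a tiny hypothetical one; real tokenizers learn tens of thousands of pieces from data, and their exact splits differ.

```python
import string

# Hypothetical toy vocabulary: a few common subwords plus every single letter,
# so any lowercase word can still be tokenized, even a misspelled one.
VOCAB = {"un", "believ", "able", "break", "read", "re", "ing", "s"} | set(string.ascii_lowercase)

def tokenize(word, vocab=VOCAB):
    """Greedy longest-match-first subword tokenization."""
    tokens, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, shrinking until a vocabulary piece matches.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            raise ValueError(f"cannot tokenize character {word[start]!r}")
    return tokens

print(tokenize("unbelievable"))  # ['un', 'believ', 'able']
print(tokenize("unreadable"))    # ['un', 'read', 'able']
print(tokenize("unbelievabel"))  # misspelled ending falls back to single letters
```

The shared pieces “un” and “able” are what let the model relate words it has never seen whole to words it has.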

3. Identifying Important Words With the Attention Mechanism

Transformers use attention to decide which parts of the sentence deserve focus.

For example, in the sentence:

“Send the report to the manager who approved the budget.”

The model needs to understand that “who approved the budget” describes “the manager,” not the act of sending.

Attention helps the model track these relationships correctly, even when sentences become longer or more detailed.
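Most transformers implement this with scaled dot-product attention: each word's query vector is compared with every word's key vector, the scores are divided by the square root of the vector size and turned into weights with a softmax, and the output is a weighted mix of value vectors. The pure-Python sketch below uses hand-picked two-dimensional vectors for a few words from the example sentence, chosen so that “approved” should attend mostly to “manager.” Real models learn these vectors, use hundreds of dimensions, and run many attention heads in parallel.

```python
import math

def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: each dimension is a weighted average of the value vectors.
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output

# Hypothetical hand-picked vectors; a trained model would learn these.
words = ["send", "report", "manager", "approved"]
keys = [[3.0, 0.0], [2.0, 0.5], [0.0, 3.0], [0.5, 1.0]]
values = keys  # reuse the keys as values to keep the example short
query_for_approved = [0.0, 3.0]

weights, _ = attend(query_for_approved, keys, values)
for word, w in zip(words, weights):
    print(f"{word:>8}: {w:.3f}")
```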

4. Learning Through Continuous Prediction and Correction

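During training, the model repeatedly tries to guess the next token in a sequence. After each batch of guesses, the training procedure measures how far the predictions were from the actual tokens and adjusts the model's internal weights, often billions of them, by tiny amounts so the correct tokens become more likely. Over time, this helps the model learn: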

grammar rules

phrasing patterns

conversational tone

factual relationships

This is the step that allows the model to write new text that feels natural instead of random.
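The prediction-and-correction loop can be shown at toy scale. This sketch trains a bigram model, where the score for each possible next word is a learnable number, using cross-entropy loss and plain gradient descent. The sentence, learning rate, and epoch count are arbitrary assumptions; real models apply the same idea to billions of weights in a neural network rather than a lookup table.

```python
import math

def softmax(scores):
    """Turn a dict of raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def init_logits(vocab):
    """All scores start at zero, so every next token is equally likely."""
    return {prev: {nxt: 0.0 for nxt in vocab} for prev in vocab}

def average_loss(logits, tokens):
    """Mean cross-entropy of predicting each token from the one before it."""
    pairs = list(zip(tokens, tokens[1:]))
    return sum(-math.log(softmax(logits[p])[t]) for p, t in pairs) / len(pairs)

def train(tokens, epochs=100, lr=0.5):
    logits = init_logits(sorted(set(tokens)))
    for _ in range(epochs):
        for prev, target in zip(tokens, tokens[1:]):
            probs = softmax(logits[prev])  # the model's current guess
            for tok, p in probs.items():
                # Cross-entropy gradient: predicted probability minus 1 for the
                # correct token (minus 0 for every other token).
                grad = p - (1.0 if tok == target else 0.0)
                logits[prev][tok] -= lr * grad  # small corrective nudge
    return logits

tokens = "the cat sat on the mat".split()
before = average_loss(init_logits(sorted(set(tokens))), tokens)
trained = train(tokens)
after = average_loss(trained, tokens)
print(f"loss before: {before:.3f}, after: {after:.3f}")
probs = softmax(trained["sat"])
print(max(probs, key=probs.get))  # on
```

The loss never reaches zero here because “the” is followed by both “cat” and “mat,” so the best the model can learn is to split its guess between them.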

5. Generating Responses Using Learned Patterns

After training, the model uses all the patterns it learned to produce output one token at a time.

The choice for each next token depends on:

the input provided

the previous tokens it has already generated

the patterns it learned during training

This is why the model can answer questions, rewrite text, follow instructions, or provide explanations that are coherent and contextually relevant.
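The generation loop described above can be sketched in a few lines. The probability table is a hypothetical stand-in for a trained model, and the sketch contrasts two common decoding choices: greedy decoding, which always takes the most likely token, and sampling, which picks tokens in proportion to their probability.

```python
import random

# Hypothetical next-token probabilities standing in for a trained model.
# A real model scores its whole vocabulary using the full input and every
# token generated so far; this toy table only looks at the last token.
NEXT_TOKEN_PROBS = {
    "your": {"order": 0.6, "appointment": 0.4},
    "order": {"has": 0.9, "<end>": 0.1},
    "has": {"shipped": 0.8, "arrived": 0.2},
    "appointment": {"is": 1.0},
    "is": {"confirmed": 1.0},
}

def next_token_probs(tokens):
    """Return a distribution over the next token; unknown contexts simply end."""
    return NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})

def generate(prompt, max_new_tokens=10, greedy=True, seed=0):
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        if greedy:
            nxt = max(probs, key=probs.get)  # always pick the most likely token
        else:
            # Sample in proportion to probability, so outputs can vary.
            nxt = rng.choices(list(probs), weights=list(probs.values()))[0]
        if nxt == "<end>":
            break
        tokens.append(nxt)  # the new token becomes part of the context
    return tokens

print(" ".join(generate(["your"])))  # your order has shipped
print(" ".join(generate(["your"], greedy=False, seed=1)))
```

Greedy decoding is predictable but repetitive; sampling adds variety, which is why many chat systems expose a setting that controls how much randomness to allow.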

By understanding these steps, you gain a clearer picture of how language models process information and why they can generate responses that feel structured, coherent, and context-aware.

Where You See Language Models in Real Life

Language models operate behind the scenes in many tools people use every day. They help systems understand intent, interpret complex inputs, and generate accurate responses across different digital experiences.

Virtual Assistants: Assistants like Siri and Alexa use speech recognition to convert spoken audio into text, then use language models to identify the core action in the request and map it to the correct function. Instead of responding only to fixed commands, they can interpret flexible phrasing such as “Set a reminder for later this evening” and understand the intent behind it.

Customer Support Automation: AI-driven support tools use language models to analyze user messages, detect issue types, and retrieve the most relevant response from internal knowledge systems. This enables automated resolution for tasks like password resets, order checks, and basic troubleshooting without rigid, rule-based scripts.

Document Summaries and Transcriptions: Models process long recordings or reports by identifying topic transitions, important details, and non-essential filler content. They then condense the material into summaries that preserve meaning without manual review. This is valuable for industries that handle operational logs, compliance reports, or patient records.

Search and Recommendation Systems: Modern search engines rely on language models to understand queries in natural language. Instead of matching keywords, they infer intent and context. Similarly, recommendation engines use models to interpret item descriptions, user reviews, and preferences to deliver suggestions that align with user behavior.

Code Assistance: Developer tools trained on software repositories use language models to understand coding patterns, suggest improvements, and generate working code from plain language descriptions. This accelerates development and reduces repetitive tasks for engineering teams.

These examples show how deeply embedded language models are in everyday digital experiences, even when users are not consciously aware of the technology working behind the scenes.

Benefits and Limits of Language Models

Language models offer advanced language understanding capabilities, though they also introduce certain challenges depending on how they are used.

Benefits

They interpret multi-layered inputs by capturing relationships between sentences, which helps systems understand complex instructions rather than isolated keywords.

A single model can handle tasks such as rewriting, extracting information, generating drafts, or analyzing tone, reducing the need for multiple specialized systems.

Modern language models support many languages and dialects, enabling cross-regional applications and more inclusive user experiences.

Limits

Their performance depends heavily on the coverage and balance of the training data. Missing or skewed data can lead to weak responses in niche topics.

They operate on statistical patterns, not real understanding, which means they may generate confident but inaccurate responses for unfamiliar or ambiguous inputs.

Running advanced models can require considerable computational resources, which increases infrastructure costs for teams working at scale.
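A back-of-the-envelope calculation shows why compute cost comes up so often. Storing a model's weights takes roughly (number of parameters × bits per parameter ÷ 8) bytes. The sketch below applies that to a hypothetical 7-billion-parameter model; it counts weights only, so actual memory use during inference is higher once activations and framework overhead are added.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores activations, cached attention state, and framework overhead,
    so real usage is higher.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9

# A hypothetical 7B-parameter model at different numeric precisions.
for bits in (32, 16, 8, 4):
    print(f"7B params @ {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# prints 28.0, 14.0, 7.0 and 3.5 GB
```

Halving the bits per parameter halves the weight memory, which is one reason smaller and compressed models are attractive for teams with limited hardware.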

Recognizing both strengths and limitations helps you evaluate where language models add genuine value and where careful oversight is still necessary.

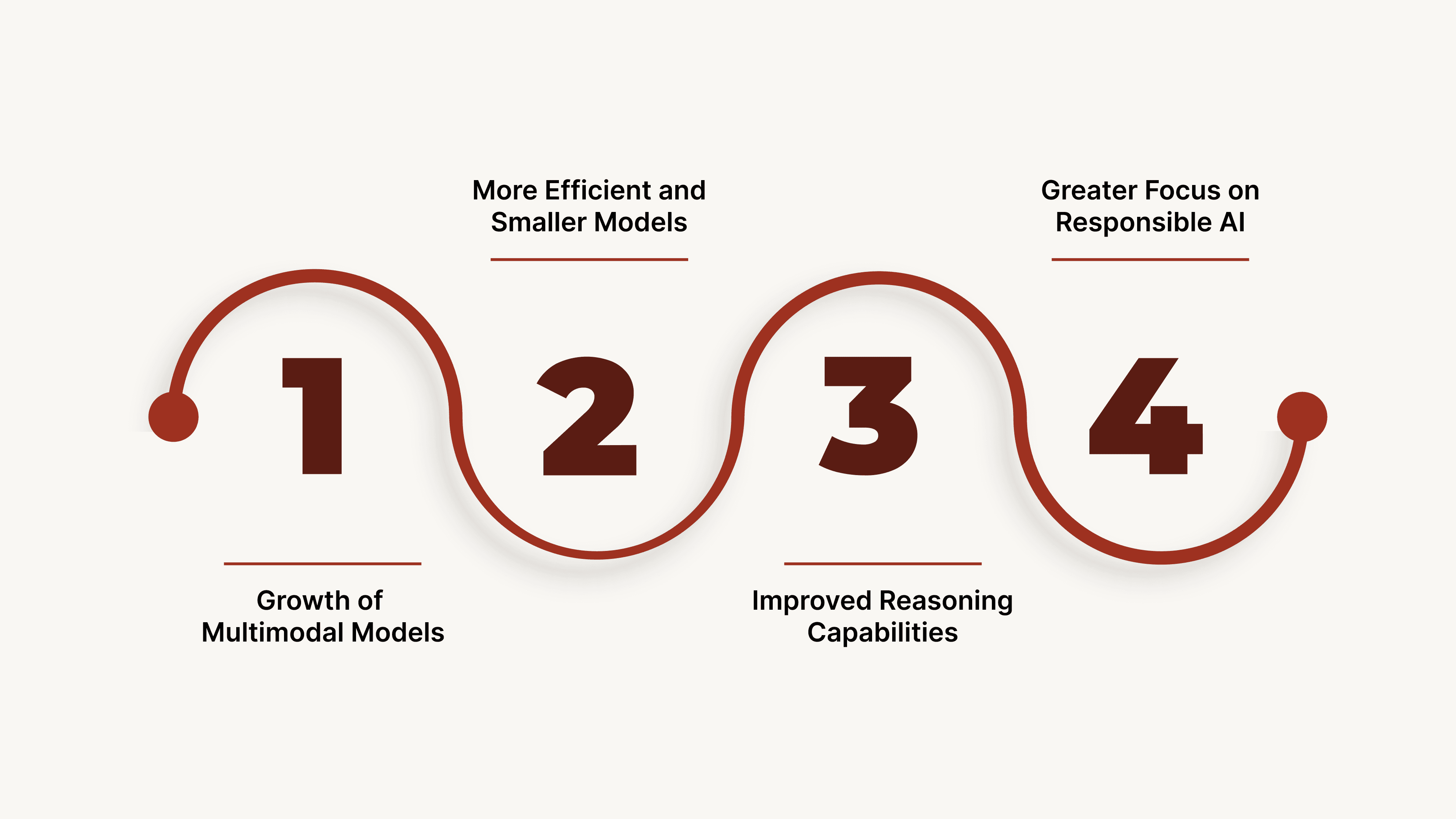

The Future of Language Models

Language models are evolving quickly, and the next generation is focusing on improved understanding, efficiency, and the ability to work across different types of information. These developments will make AI tools more capable and accessible for both individuals and businesses.

Growth of Multimodal Models: Newer models can understand text alongside images, audio, and video, making it possible to perform tasks that require multiple types of input.

More Efficient and Smaller Models: Research is shifting toward compact models that deliver strong performance without heavy hardware, enabling more on-device and offline AI experiences.

Improved Reasoning Capabilities: Future models aim to follow logical steps more reliably, handle structured tasks, and solve problems that require multiple layers of reasoning.

Greater Focus on Responsible AI: Developers are prioritizing transparency and safety through better data filtering, improved factual grounding, and clearer control mechanisms.

These advancements indicate a future where language models become more adaptable, more transparent, and more aligned with real-world tasks across industries.

How CubeRoot Uses Language Models in Voice AI

CubeRoot integrates language models into the core of its Voice AI engine to deliver accurate, context-aware conversations across high-volume enterprise workflows.

Intent Recognition

The system identifies verbs, entities, and key phrases in a caller’s speech and maps them to predefined workflows. This ensures the agent correctly interprets requests like rescheduling, claim checks, delivery updates, or account inquiries without relying on fixed phrases.

Structured Call Summaries

Instead of generating free-form text, CubeRoot uses language models to produce structured summaries with fields such as issue category, resolution outcome, and required follow-up. These summaries sync directly into CRMs and reduce manual review time for teams.
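As an illustration of what a structured summary might look like, here is a hypothetical Python sketch that validates JSON returned by a language model against the three fields named above. The field values, the allowed outcomes, and the JSON format are assumptions for this example, not CubeRoot's actual schema or API.

```python
import json
from dataclasses import dataclass

# Hypothetical schema based on the fields named above; not CubeRoot's actual format.
REQUIRED_FIELDS = {"issue_category", "resolution_outcome", "required_follow_up"}
ALLOWED_OUTCOMES = {"resolved", "escalated", "pending"}

@dataclass
class CallSummary:
    issue_category: str
    resolution_outcome: str
    required_follow_up: str

def parse_summary(model_output: str) -> CallSummary:
    """Parse the model's JSON output, rejecting missing fields or unexpected outcomes."""
    data = json.loads(model_output)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("summary must be a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["resolution_outcome"] not in ALLOWED_OUTCOMES:
        raise ValueError(f"unexpected outcome: {data['resolution_outcome']!r}")
    return CallSummary(**{field: str(data[field]) for field in REQUIRED_FIELDS})

raw = ('{"issue_category": "delivery_update", "resolution_outcome": "resolved", '
       '"required_follow_up": "none"}')
print(parse_summary(raw))
```

Validating before syncing to a CRM means a malformed model response can be retried or flagged for review instead of silently creating a bad record.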

Sentiment Interpretation

Models detect cues such as frustration, urgency, or confusion in a caller’s speech. This allows the system to adjust tone, escalate to a human agent, or switch to a more guided script when sensitivity is required.

Multilingual Support

CubeRoot applies language models to handle multilingual and code-mixed conversations common in India. The system interprets shifts between English and regional languages and generates responses that sound natural to the caller.

Smarter Workflow Decisioning

Language models classify statements, extract required information, and validate details in real time. This supports automated decisioning in workflows such as policy lookups, claim verification, appointment checks, or order rescheduling.

By embedding these capabilities into its Voice AI workflows, CubeRoot strengthens automation quality and supports large-scale conversations with clarity and consistency.

Conclusion

Language models have moved from experimental research tools to core infrastructure that shapes how people interact with software. They influence how information is searched, summarized, verified, and even created. For beginners, understanding these models is no longer optional. It is the foundation for understanding how modern AI systems read, interpret, and respond to human input.

The rapid shift toward multimodal and smaller yet high-performing models shows how quickly the field is maturing. Models are becoming more capable of connecting details across long passages, handling mixed languages, and applying structured reasoning to real tasks. At the same time, newer architectures are reducing the cost and hardware demands that once limited experimentation.

Learning how language models work gives you clarity on what these systems can do well, where they fail, and how they fit into larger workflows. It also helps you understand the principles behind many AI applications you use daily, from smarter search to automated content generation. As research progresses, language models will continue to reshape how information is processed, how decisions are supported, and how digital systems communicate with people.

Explore how CubeRoot uses LLMs to power enterprise-grade Voice AI solutions. Book a demo to see it in action.

Frequently Asked Questions

Q: What is a language model?

A: A language model is an AI system trained on large collections of text to understand patterns in language. Many are trained to predict the next word in a sequence, which enables them to generate text, interpret queries, summarize content, and support conversational tasks.

Q: What makes a large language model different from a regular language model?

A: Large language models are trained on significantly bigger datasets and contain far more parameters. This scale helps them understand context better, handle multiple tasks, and generate more accurate, natural-sounding responses.

Q: Why are language models important for businesses?

A: Language models allow systems to interpret customer text or voice, automate conversational workflows, summarize structured or unstructured content, and handle multilingual or code-mixed communication. This capability drives better responsiveness and efficiency at scale.

Q: What are the main limitations I should know about?

A: Key limitations include:

Models only learn from the data they are trained on, so gaps or biases in the dataset can affect performance.

They recognize patterns but do not truly understand meaning, which can lead to plausible but incorrect responses.

Many large models require significant compute resources, making them costlier to train or deploy effectively.

Q: Can language models work across different Indian languages or dialects?

A: Yes. Many modern models are trained on multilingual datasets or can be fine-tuned for specific languages. In the Indian context, code-mixing (switching between Hindi, English, or regional languages within a single conversation) is becoming common; models that support it improve localization and reach in Tier II/III markets.

Q: How can I start using a language model in my workflow?

A: Begin by identifying a high-volume, repetitive communication task in your business (for example, appointment reminders, customer voice queries). Choose a model suited to that task (e.g., one fine-tuned for voice or multilingual data). Integrate via APIs or low-code platforms. Monitor for accuracy, tone, and escalation triggers. Then scale once you validate performance.